Redundancy and scalability are important for VoIP systems. Virtualization makes both of these easier, less costly, and more efficient. Coupled with cloud services, virtualization has revolutionized how network services are provided, and in particular, how VoIP deployment is executed.

In this article, we explain what virtualization is, the trends governing its newest technologies, and how all of this can be leveraged for use with VoIP services.

Advantages for VoIP systems

VoIP systems require a high level of redundancy to maintain the reliability of the telephony services that businesses depend on. SIP servers can be run as virtual entities, rather than as stand-alone equipment, to provide call control to any number of IP phones. Virtualization makes that redundancy easy to implement: you simply create additional SIP servers that function in a high-availability arrangement. If one server fails, the other takes over. And the distributed nature of virtualization, where multiple pieces of hardware support these virtual machines (VMs), makes a failure in memory or CPU more of a nuisance than a catastrophe. If any single module of a virtualization platform fails, you simply hot-swap it with another, typically without any downtime for the services running on it.
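
The takeover behaviour described above can be sketched in a few lines of Python. This is only an illustration of the idea, not how any particular hypervisor or SIP platform implements high availability; the `SipServer` class, the `pick_active` function, and the server names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SipServer:
    """A virtual SIP server in a high-availability pair (hypothetical model)."""
    name: str
    healthy: bool = True


def pick_active(servers):
    """Return the first healthy server in priority order, or None if all are down."""
    for server in servers:
        if server.healthy:
            return server
    return None


primary = SipServer("sip-vm-1")
standby = SipServer("sip-vm-2")
pair = [primary, standby]

print(pick_active(pair).name)  # the primary handles call control

primary.healthy = False        # simulate a failure of the primary VM
print(pick_active(pair).name)  # the standby takes over
```

In a real deployment the health flag would be driven by some form of monitoring, and the phones would need a way to reach whichever server is active; both are outside the scope of this sketch.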

Scalability is also important for VoIP systems. Many businesses start off with a few IP phones but quickly increase in size. Takeovers of other companies can double or triple the telephony requirements of an enterprise. With virtualization, the increased computing power demand on the SIP server can easily be accommodated by simply increasing available system resources on the VMs in question.

The problems with non-virtualized servers

In a traditional data center, you typically have a physical server with CPUs, memory, hard drive space, and several other peripherals that run an operating system, on top of which one or more network services operate. For example, you may have a server running Microsoft Windows Server 2019, on top of which you run a web server such as Apache, and an email server such as Microsoft Exchange. This physical box is connected to the network and delivers email services as well as web services to attached networks.

Although all of this sounds normal, it is extremely inefficient, because over the course of a day the average resource usage on that server is very low. For the vast majority of the time, CPU usage is well below 10%, memory usage may be somewhere around 50% or less, network bandwidth is much lower than the 1 Gbps or 10 Gbps available on the network interface, and there are usually at least several tens of gigabytes of free hard drive space. Looking at it another way, over 90% of the CPU power, 50% or more of the memory, a large portion of the network bandwidth, and many gigabytes of storage remain unused. Since CPU, memory, network capacity, and hard drive space all cost money, a large percentage of the company's investment sits idle.

In addition, a physical server requires rack space, redundant electrical power, cooling systems, and maintenance, all of which add to the cost. Furthermore, if the server needs an upgrade due to increased demand or failure, this upgrade must be scheduled, costs money, and will probably require some downtime to implement.

Now imagine that your enterprise network makes use of several servers, maybe 10 or 20, to run all of your services. Imagine the amount of CPU, memory, network bandwidth, and hard drive space that is going unused! As the scale increases, the amount of unused resources becomes immense.
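
To put rough numbers on this, the short Python sketch below multiplies out the figures used above. It treats the "well below 10%" CPU figure as a 10% ceiling and the memory figure as exactly 50%, so the result is a conservative illustration rather than a measurement; the function name is made up for this example.

```python
def idle_server_equivalents(server_count, utilization):
    """Capacity left idle, expressed as a number of whole servers' worth."""
    return server_count * (1.0 - utilization)


SERVERS = 20            # upper end of the "10 or 20" servers mentioned above
CPU_UTILIZATION = 0.10  # "well below 10%", taken here as a ceiling
MEM_UTILIZATION = 0.50  # "around 50% or less"

idle_cpu = idle_server_equivalents(SERVERS, CPU_UTILIZATION)
idle_mem = idle_server_equivalents(SERVERS, MEM_UTILIZATION)

print(f"Idle CPU: about {idle_cpu:.0f} of {SERVERS} servers' worth")     # about 18
print(f"Idle memory: about {idle_mem:.0f} of {SERVERS} servers' worth")  # about 10
```

Under these assumptions, the CPU capacity of roughly 18 of the 20 servers is paid for but never used.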

Virtualization pools all of these resources into a single repository, and allows servers to access those resources on demand. Such an arrangement is more efficient, since resources are more uniformly (and dynamically) shared among servers, and can be accessed and used as needed.
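
As a minimal sketch of the pooling idea, the Python class below models a shared repository from which servers take and return resources. The `ResourcePool` class and all of the numbers are hypothetical, and the model is deliberately simplified: it tracks only CPUs and memory, and it never hands out more than it physically has.

```python
class ResourcePool:
    """A shared pool of CPUs and memory that servers draw from on demand."""

    def __init__(self, cpus, memory_gb):
        self.free_cpus = cpus
        self.free_memory_gb = memory_gb

    def allocate(self, cpus, memory_gb):
        """Reserve resources if the pool can cover the request; report success."""
        if cpus > self.free_cpus or memory_gb > self.free_memory_gb:
            return False
        self.free_cpus -= cpus
        self.free_memory_gb -= memory_gb
        return True

    def release(self, cpus, memory_gb):
        """Return resources to the pool so other servers can use them."""
        self.free_cpus += cpus
        self.free_memory_gb += memory_gb


pool = ResourcePool(cpus=16, memory_gb=64)

print(pool.allocate(4, 16))   # True: the request fits
print(pool.allocate(16, 8))   # False: only 12 CPUs are still free
pool.release(4, 16)           # the first server no longer needs its share
print(pool.free_cpus, pool.free_memory_gb)  # 16 64
```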

Introduction to virtualization

Virtualization is not a new concept. It has been around since the 1960s, when it was introduced as a method of logically dividing the system resources of mainframe computers. Since then, it has broadened greatly in scope and become an indispensable part of modern data centers. Virtualization continues to evolve, giving network service providers and administrators new tools to deploy and administer online services quickly and efficiently.

Virtualization allows you to create virtual (as opposed to physical) servers, called virtual machines, or VMs, on which you can run operating systems and network service software, just as you would on a physical server. These VMs “borrow” or seize shared resources as needed from the physical infrastructure on which they run. You can build several tens or hundreds of VMs on a single infrastructure, all of which share a common pool of CPUs, memory, hard drive space, and network bandwidth, making much more efficient use of the available resources compared with traditional servers. You can create these VMs, indicate their specifications, and run them just like you would their physical counterparts.

The physical infrastructure

The concept of virtualization revolves around building a powerful and resource-rich physical infrastructure composed of multiple CPUs, large amounts of memory, large repositories of hard drive space, and high-speed networking connections. This physical infrastructure can be viewed as one gigantic server, but is more often deployed as several distinct units. An example of such a set of devices can be seen below.

Note that these devices are modular in nature, and can be expanded by adding modules as needed. Each module contains several CPUs, 32 GB of memory, and 512 GB of hard drive space. Depending on the specifications of the VMs created, the device pictured can host over 1,000 VMs. Think about the amount of rack space, power, cooling, and cabling that is saved compared with traditional servers!


The virtual platform

To create a VM, the administrator connects to the interface of the platform and creates a server with the appropriate resources. It’s a little like going to the computer store and ordering your server. You specify the number of CPUs and their speeds, the amount of memory, the hard drive space, and the operating system. The server is up and running within minutes and is ready to have additional network software installed.
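
The "order" you place can be thought of as a small specification record. The Python sketch below is a hypothetical model of such a record, not the API of any real virtualization platform; the `VmSpec` class, its field names, and the example values are all assumptions, and CPU speed is left out for brevity.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VmSpec:
    """The specification an administrator supplies when creating a VM."""
    name: str
    cpus: int
    memory_gb: int
    disk_gb: int
    guest_os: str

    def __post_init__(self):
        # Reject specifications that could never describe a working server.
        if self.cpus < 1 or self.memory_gb < 1 or self.disk_gb < 1:
            raise ValueError("cpus, memory_gb and disk_gb must all be at least 1")


sip_server = VmSpec(name="sip-vm-1", cpus=2, memory_gb=4, disk_gb=40, guest_os="Linux")
print(sip_server)
```

Because the specification is just data, changing it later is a matter of writing a new record rather than buying new hardware.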

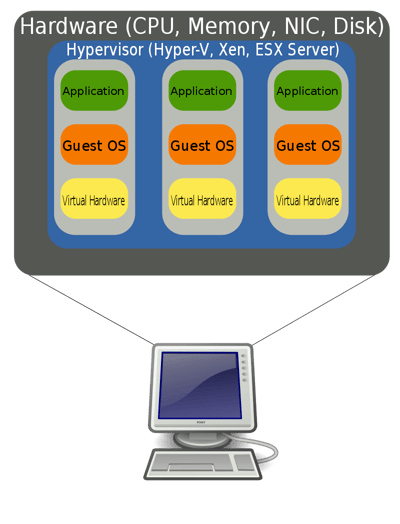

The server itself is created using a software program called a hypervisor. This is a control program that runs on the platform on which you create your VMs. Well-known hypervisors include Hyper-V, Xen, and ESXi, among others. In the hypervisor, you create the virtual hardware for each VM and install an operating system on it, known as the guest OS. On each individual VM, you can then install any application you wish. The following diagram conceptualizes the virtual entities as they exist within the physical hardware device.

Once created, a VM resides on the physical platform as a virtual entity, with no indication of which physical CPU or which physical piece of memory it is using. This is managed internally by the platform to keep resource usage efficient and evenly distributed.
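
One simple way a platform could keep usage evenly distributed is to place each new VM on whichever physical module currently carries the least load. The Python sketch below shows that least-loaded policy as an illustration only; real platforms use their own, more sophisticated schedulers, and the `place_vm` function and module names are hypothetical.

```python
def place_vm(host_load, vm_cpus):
    """Assign a VM to the host with the fewest CPUs already committed."""
    target = min(host_load, key=host_load.get)
    host_load[target] += vm_cpus
    return target


# CPUs already committed on each physical module (all idle to start with).
host_load = {"module-a": 0, "module-b": 0, "module-c": 0}

# Place four VMs needing 4, 2, 2 and 1 CPUs respectively.
placements = [place_vm(host_load, cpus) for cpus in (4, 2, 2, 1)]

print(placements)  # ['module-a', 'module-b', 'module-c', 'module-b']
print(host_load)   # {'module-a': 4, 'module-b': 3, 'module-c': 2}
```

The administrator never chooses a module; the placement decision stays inside the platform.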

With virtualization, if you need to upgrade a server, just go in and change the specifications of its hardware. You need a second server for redundancy? Create it on the platform. Do you need to cluster together five servers to be able to respond to the demand of your users? Go in and do so. It’s as simple as clicking, dragging, and dropping the appropriate components into the right box.

Conclusion

Virtualization is arguably one of the most profound innovations in network service provisioning, improving it beyond what was thought possible even a decade ago. For VoIP deployments, virtualization makes redundancy and scalability easier, less costly, and more efficient.
